Written entirely in C++, using Vulkan as the graphics API and GLFW for windowing. GitHub link.

Intro

Inspired by the custom engine created for the game Noita, I decided to explore the possibility of creating a 3D variant of it. During my research I found that the use of voxels in video games is heavily under-researched; I also investigated other methods for destructibility and various techniques for rendering voxels. Having completed this literature review, I began working on the artefact:

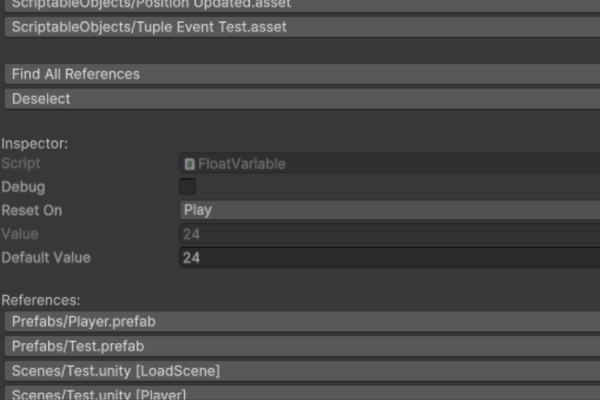

Current Features

- Vulkan renderer with added Dear ImGui render pass.

- Compute shader to voxelise an input 3D model.

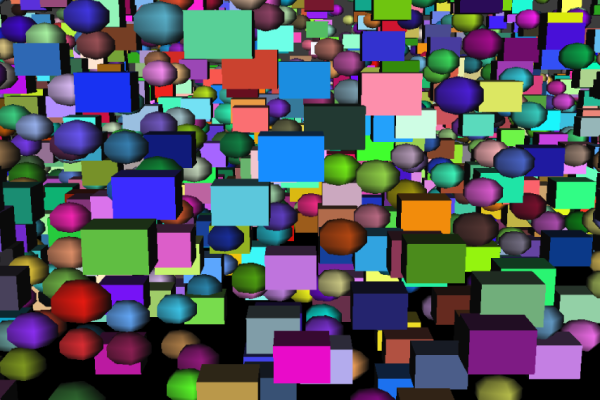

- Geometry shader that takes the voxel points and outputs the primitives necessary for a cube.

- A simple CPU-based simulation with constraints, collision and physics resolution between voxels.

- Option to extract only the surface voxelisation for relevant models (such as the teapot).

Future Work

- Improve the functionality of the voxel simulation.

- Potentially port the simulation to the GPU for better performance.

- Switch from the geometry shader to ray tracing for more scalable rendering performance.

- Profile and optimise the code.

- Store voxelisation in a sparse voxel octree and serialise to disk.

Interesting Problems

So far this project has been a massive undertaking. Before it, I had only limited experience with Vulkan, gained from preparation work I did in the summer before my final year started. I had also never touched compute or geometry shaders, both of which are now crucial to the workings of my program. Beyond that, it has been a reasonable challenge to keep everything well structured, both to make it easy to add further functionality and to keep the program's compile time down.

One of the problems I came across was in porting the voxelisation code from the CPU to the GPU. The algorithm requires every query point (voxel centre) to loop over every triangle in the model, so the model's vertex and index data had to be passed to the compute shader. When implementing this, I found that elements of the shader storage buffer object had a required minimum alignment of 16 bytes, whereas the index buffer I was passing in had a per-element alignment of only 4 bytes (a single uint32_t). Adding 12 bytes of padding to every index would have wasted a lot of memory, and what the shader actually needs is the set of indices for each triangle. I therefore changed my implementation to pass the 3 indices of a triangle followed by 4 bytes of padding, giving one 16-byte element per triangle.
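The repacking described above can be sketched on the CPU side roughly as follows. This is a minimal illustration rather than the project's actual code: the names `PackedTriangle` and `packTriangles` are hypothetical, and it assumes the mesh is a plain triangle list of `uint32_t` indices.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical element type: the three vertex indices of one triangle plus
// 4 bytes of padding, so that every buffer element is exactly 16 bytes.
struct PackedTriangle {
    std::uint32_t i0;
    std::uint32_t i1;
    std::uint32_t i2;
    std::uint32_t pad; // unused; only fills the element out to 16 bytes
};

static_assert(sizeof(PackedTriangle) == 16,
              "each triangle must occupy one 16-byte element");

// Converts a flat triangle-list index buffer (3 indices per triangle) into
// 16-byte elements ready to be copied into a storage buffer.
std::vector<PackedTriangle> packTriangles(const std::vector<std::uint32_t>& indices) {
    if (indices.size() % 3 != 0) {
        throw std::invalid_argument("index count must be a multiple of 3");
    }

    std::vector<PackedTriangle> triangles;
    triangles.reserve(indices.size() / 3);

    for (std::size_t i = 0; i < indices.size(); i += 3) {
        triangles.push_back({indices[i], indices[i + 1], indices[i + 2], 0u});
    }

    assert(triangles.size() == indices.size() / 3);
    return triangles;
}
```

With this layout each triangle costs 16 bytes, compared with 48 bytes if every index were padded to 16 bytes on its own. On the shader side, one plausible way to read such a buffer (again an assumption, not taken from the project) is as an array of `uvec4`, ignoring the fourth component.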

Another problem, early in development, came from my lack of knowledge of how geometry shaders work. After changing the shader to emit a quad for every point as a test, I found that the colours of the quads flickered. Flickering is often a sign of a synchronisation issue, so I assumed that was the cause and double-checked the synchronisation points in my draw calls and pipeline, to no avail. I left the problem unsolved for a while, as I could not use RenderDoc on the University computers to debug Vulkan projects. As soon as I got to my home computer, I used RenderDoc to debug my pipeline and discovered that the real cause was an incorrect assumption: I had thought I could set the output colour once and have it carry across every emit vertex call. Debugging showed that the output colour was only being set for the first emitted vertex, so it has to be written again before each vertex is emitted.